AI automation promises significant efficiency gains, but many businesses discover their infrastructure can't support it only after investing time and resources. The problem isn't usually the AI tools themselves: it's the foundation they're built on.

We've worked with businesses across various sectors, and we've noticed a pattern. Companies rush to adopt AI-powered automation without checking whether their data infrastructure can handle it. The result? Failed pilots, frustrated teams, and wasted budgets.

Let's explore five data problems that commonly derail AI initiatives and practical ways to address them before they become expensive mistakes.

1. Poor Data Quality and Organization

AI models are only as good as the data we feed them. If our datasets are incomplete, inconsistent, or poorly organized, even the most sophisticated AI won't deliver accurate results.

This isn't just about having "enough" data: it's about having the right data in the right format. Many businesses discover their historical records contain gaps, duplicate entries, or conflicting information across different systems. When AI tries to learn from this messy foundation, it produces unreliable outputs that can't be trusted for critical decisions.

How to fix it: Start with a data audit. We need to identify what data exists, where it lives, and whether it's fit for purpose. This means establishing clear standards for data entry, implementing validation rules, and regularly cleaning existing datasets. It's not glamorous work, but it's essential. Consider appointing data stewards within each department who understand both the business context and quality requirements.
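To make the audit concrete, here is a minimal Python sketch that scans a list of records for three of the problems described above: missing values, exact duplicates, and conflicting entries that share an ID. The field names (`customer_id`, `email`, `country`) and the `audit_records` helper are hypothetical. A real audit would run against your own system exports and would probably use a dataframe library rather than plain dictionaries.

```python
from collections import defaultdict


def audit_records(records, key_field, required_fields):
    """Count missing values, exact duplicates, and conflicting records per key."""
    missing = defaultdict(int)   # field name -> number of records with no value
    by_key = defaultdict(list)   # key value -> all records that share it
    for rec in records:
        for field in required_fields:
            if rec.get(field) in (None, ""):
                missing[field] += 1
        by_key[rec.get(key_field)].append(rec)

    duplicates = 0   # surplus copies of records that are identical field for field
    conflicts = []   # keys whose records disagree on at least one field
    for key, group in by_key.items():
        if len(group) < 2:
            continue
        distinct = {tuple(sorted(r.items())) for r in group}
        duplicates += len(group) - len(distinct)
        if len(distinct) > 1:
            conflicts.append(key)
    return {"missing": dict(missing), "duplicates": duplicates, "conflicts": conflicts}


# Hypothetical customer export combining records from two systems.
customers = [
    {"customer_id": "C1", "email": "a@example.com", "country": "UK"},
    {"customer_id": "C1", "email": "a@example.com", "country": "UK"},  # exact duplicate
    {"customer_id": "C2", "email": "", "country": "UK"},               # missing email
    {"customer_id": "C3", "email": "c@example.com", "country": "UK"},
    {"customer_id": "C3", "email": "c@example.com", "country": "FR"},  # conflicting country
]
report = audit_records(customers, "customer_id", ["email", "country"])
print(report)  # {'missing': {'email': 1}, 'duplicates': 1, 'conflicts': ['C3']}
```

Running a check like this regularly, rather than once, is what turns a one-off clean-up into the ongoing validation the audit is meant to establish.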

2. Inadequate Data Governance Policies

Without clear governance frameworks, data becomes increasingly difficult to manage as AI workloads scale. We've seen organizations where different teams use different naming conventions, storage locations, and access protocols. This creates chaos when trying to implement enterprise-wide AI solutions.

Data governance isn't just bureaucracy: it's about establishing who owns what data, who can access it, how it should be used, and how long it should be retained. These questions become critical when AI systems start making decisions based on that data.

How to fix it: Develop a data governance framework before launching AI initiatives. This should define clear ownership, establish data classification levels, and create approval processes for AI model training. We recommend starting small with a pilot department, refining the approach, then scaling across the organization. The framework should be practical and enforceable, not just a document that sits unused.
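As an illustration of ownership, classification levels, and training approval working together, the sketch below records each dataset's policy as data and checks it before the dataset is used to train a model. The four classification levels, the `CONFIDENTIAL` approval threshold, and the `can_use_for_training` helper are assumptions made for this example, not an industry standard.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional, Tuple


class Classification(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass
class DatasetPolicy:
    name: str
    owner: Optional[str]                        # accountable owner; None means nobody owns it
    classification: Classification
    training_approved_by: Optional[str] = None  # who signed off on use for AI training


# Assumed rule: CONFIDENTIAL data and above needs explicit sign-off before training.
APPROVAL_THRESHOLD = Classification.CONFIDENTIAL


def can_use_for_training(ds: DatasetPolicy) -> Tuple[bool, str]:
    """Return (allowed, reason) for using a dataset to train a model."""
    if not ds.owner:
        return False, "no owner assigned"
    if ds.classification >= APPROVAL_THRESHOLD and not ds.training_approved_by:
        return False, f"approval required for {ds.classification.name}"
    return True, "ok"


catalog = [
    DatasetPolicy("web_analytics", "marketing", Classification.INTERNAL),
    DatasetPolicy("payroll", "finance", Classification.RESTRICTED),
    DatasetPolicy("legacy_exports", None, Classification.INTERNAL),
]
results = {ds.name: can_use_for_training(ds) for ds in catalog}
for name, (allowed, reason) in results.items():
    print(f"{name}: {'allowed' if allowed else 'blocked'} ({reason})")
```

Once the owner records an approval (by setting `training_approved_by`), the same check lets the restricted dataset through. The approval process is then enforced in the pipeline itself rather than living only in a policy document.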

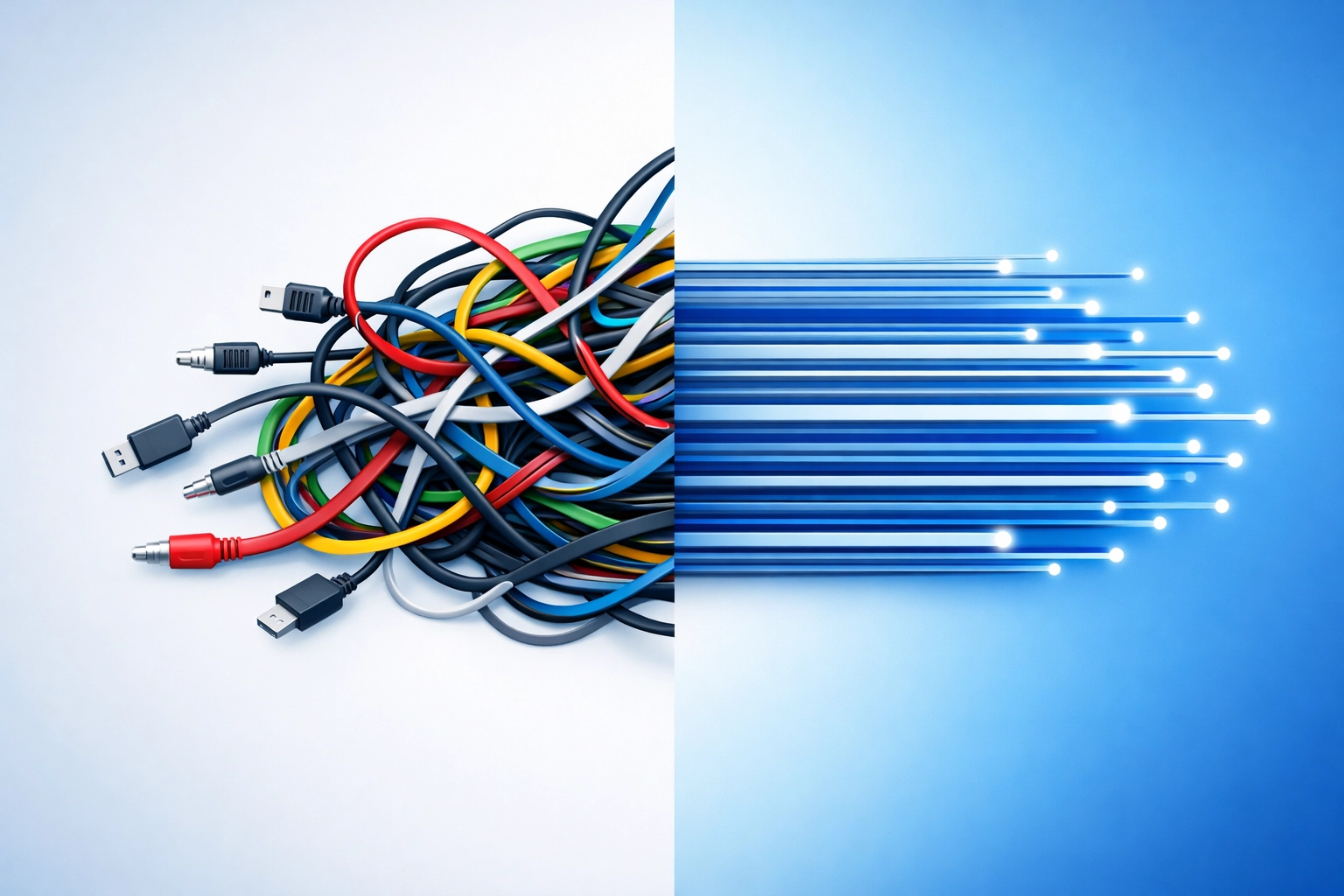

3. Insufficient Data Integration

AI systems depend on seamless integration of data from various sources. When information sits in disconnected silos (customer data in one system, financial data in another, operational data somewhere else), AI can't see the complete picture.

Many businesses run legacy systems that weren't designed to talk to each other. Adding modern AI tools on top of this fragmented infrastructure creates integration nightmares. The AI might have access to some data but miss crucial context from other systems, leading to incomplete or misleading insights.

How to fix it: We need to map data flows across our organization and identify integration gaps. Modern middleware and API-based solutions can bridge legacy systems without requiring complete infrastructure overhauls. Cloud-based data warehouses provide centralized locations where data from multiple sources can be normalized and made accessible to AI tools.

The key is starting with business priorities. Which processes would benefit most from AI automation? Focus integration efforts there first, rather than trying to connect everything simultaneously.
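The normalization step mentioned above can be sketched in a few lines. This example assumes two hypothetical exports, one from a CRM and one from a billing system, that identify customers differently. It maps both to a single canonical ID, standardizes formats, and reports billing rows with no matching customer. Those unmatched rows are exactly the kind of integration gap a data-flow mapping exercise should surface.

```python
def normalize_id(raw: str) -> str:
    """Map IDs such as 'c-001' and 'C001' to one canonical form, 'C001'."""
    return raw.replace("-", "").strip().upper()


def build_customer_view(crm_rows, billing_rows):
    """Merge hypothetical CRM and billing exports into one record per customer."""
    view = {}
    for row in crm_rows:
        cid = normalize_id(row["CustID"])
        view[cid] = {
            "customer_id": cid,
            "email": row["Email"].strip().lower(),
            "region": row["Region"].strip().title(),
            "total_spend_pence": 0,  # integer pence avoids floating-point rounding
        }
    unmatched = []  # billing rows with no CRM customer: an integration gap to investigate
    for row in billing_rows:
        cid = normalize_id(row["customer_ref"])
        if cid in view:
            view[cid]["total_spend_pence"] += row["amount_pence"]
        else:
            unmatched.append(cid)
    return view, unmatched


crm = [
    {"CustID": "c-001", "Email": "Ann@Example.com", "Region": "north"},
    {"CustID": "c-002", "Email": "bob@example.com ", "Region": "SOUTH"},
]
billing = [
    {"customer_ref": "C001", "amount_pence": 12500},
    {"customer_ref": "C002", "amount_pence": 4000},
    {"customer_ref": "C002", "amount_pence": 1000},
    {"customer_ref": "C003", "amount_pence": 700},
]
customers, gaps = build_customer_view(crm, billing)
print(customers["C002"])
print("unmatched billing refs:", gaps)
```

In practice, this mapping logic would typically live in middleware or in the load step of a cloud data warehouse, so that every AI tool reads the same normalized view.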

4. Infrastructure Limitations

AI processing demands can quickly overwhelm infrastructure that handles traditional workloads just fine. We're talking about significant requirements for storage capacity, processing power, and network bandwidth.

Training AI models and running real-time automation require substantial computational resources. If our infrastructure can't scale to meet these demands, AI performance suffers or, worse, other business operations are affected.

How to fix it: Conduct an infrastructure capacity assessment before deploying AI solutions. This should evaluate current utilization levels and project AI-related demands. We might discover our on-premises setup can't scale cost-effectively, making cloud platforms a better option for AI workloads.

Hybrid approaches often work well: keeping sensitive data on-premises while leveraging cloud resources for intensive AI processing. The important thing is planning for scalability from the start. Network infrastructure that supports automated workflows needs to handle high data volumes without manual intervention.
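A first-pass capacity assessment can be as simple as adding projected AI load to today's observed peaks and comparing the result against a planning ceiling. In this sketch the resource figures, the projected AI demand, and the 80% utilization ceiling are all made-up assumptions. The real inputs would come from your monitoring data and workload estimates.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    capacity: float       # total available, in the resource's own unit
    current_peak: float   # observed peak usage today, same unit
    projected_ai: float   # estimated extra peak load from planned AI workloads, same unit


TARGET_UTILIZATION = 0.8  # assumed planning ceiling that leaves 20% headroom


def assess(resources):
    """Return {name: (projected utilization, exceeds ceiling?)} for each resource."""
    findings = {}
    for r in resources:
        projected = (r.current_peak + r.projected_ai) / r.capacity
        findings[r.name] = (round(projected, 2), projected > TARGET_UTILIZATION)
    return findings


# Made-up figures for illustration only.
inventory = [
    Resource("storage_tb", capacity=50, current_peak=30, projected_ai=15),
    Resource("cpu_cores", capacity=128, current_peak=40, projected_ai=24),
    Resource("network_gbps", capacity=10, current_peak=5, projected_ai=2),
]
findings = assess(inventory)
for name, (utilization, over) in findings.items():
    print(f"{name}: {utilization:.0%} projected{' (over ceiling)' if over else ''}")
```

With these numbers, only storage would cross the ceiling once AI workloads arrive. A finding like that is what might make cloud or hybrid storage worth evaluating for that one resource, rather than replacing everything.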

5. Weak Backup and Disaster Recovery Procedures

AI-dependent workflows create new vulnerabilities. When automation handles critical processes, system failures or data loss have immediate and severe impacts. Yet many backup strategies were designed for traditional IT environments and don't account for AI-specific requirements.

AI models themselves need protection, not just the data they process. Losing trained models means losing months of refinement and optimization. We've seen businesses lose significant investment when inadequate backup procedures failed to protect their AI assets.

How to fix it: Review backup and disaster recovery plans with AI workflows in mind. This includes:

- Regular backups of trained AI models and their configurations

- Version control for model iterations

- Redundancy for data sources that feed AI systems

- Testing recovery procedures specifically for AI-dependent processes

- Clear recovery time objectives that account for AI system restoration
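Several items on that list (backing up trained models with their configurations, keeping each version separate, and testing recovery) can be sketched with the Python standard library alone. This sketch assumes a model that can be serialized to bytes. The folder layout and the `back_up_model`/`restore_model` helpers are hypothetical, and this is a simple versioned-folder approach, not a substitute for a dedicated model registry. Storing a SHA-256 checksum alongside each version lets a restore test detect a corrupted artifact instead of silently loading it.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Tuple


def back_up_model(model_bytes: bytes, config: dict, version: str, backup_root: Path) -> Path:
    """Write one model version, its configuration, and a SHA-256 checksum to its own folder."""
    target = backup_root / version
    target.mkdir(parents=True, exist_ok=False)  # fail rather than overwrite an existing version
    (target / "model.bin").write_bytes(model_bytes)
    (target / "config.json").write_text(json.dumps(config, sort_keys=True))
    (target / "model.sha256").write_text(hashlib.sha256(model_bytes).hexdigest())
    return target


def restore_model(version: str, backup_root: Path) -> Tuple[bytes, dict]:
    """Load a backed-up version, refusing it if the stored checksum does not match."""
    source = backup_root / version
    model_bytes = (source / "model.bin").read_bytes()
    expected = (source / "model.sha256").read_text().strip()
    if hashlib.sha256(model_bytes).hexdigest() != expected:
        raise ValueError(f"checksum mismatch for model version {version}")
    config = json.loads((source / "config.json").read_text())
    return model_bytes, config


# Restore drill against a throwaway directory; the "weights" are placeholder bytes.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    back_up_model(b"placeholder-weights-v1", {"learning_rate": 0.01}, "v1", root)
    back_up_model(b"placeholder-weights-v2", {"learning_rate": 0.005}, "v2", root)
    weights, config = restore_model("v1", root)

    # Simulate silent corruption of v2 and confirm the restore check catches it.
    (root / "v2" / "model.bin").write_bytes(b"corrupted")
    try:
        restore_model("v2", root)
        corruption_detected = False
    except ValueError:
        corruption_detected = True

print(weights, config, corruption_detected)
```

Running a drill like this on a schedule, and timing it, also gives a realistic input for the recovery time objectives mentioned above.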

We can't afford single points of failure in AI-enhanced operations. Redundancy and robust recovery procedures aren't optional extras: they're fundamental requirements.

Taking Action: The AI Readiness Audit

Before investing heavily in AI automation, we recommend conducting a structured readiness audit. This assessment identifies infrastructure gaps and data problems while they're still manageable.

An effective audit evaluates:

- Current data quality, completeness, and organization

- Existing governance policies and enforcement mechanisms

- Integration capabilities across systems

- Infrastructure capacity and scalability options

- Backup and disaster recovery adequacy

This isn't about achieving perfection before starting. It's about understanding our baseline and addressing critical gaps that would otherwise cause AI initiatives to fail.

The Provider-Agnostic Advantage

One challenge we consistently see is businesses locked into single-vendor solutions that don't integrate well with their existing infrastructure. When evaluating AI readiness, it's crucial to maintain flexibility.

A provider-agnostic approach means recommendations are based on what actually works for your specific situation, not on what a particular vendor happens to sell. This honest assessment often reveals that incremental improvements to existing infrastructure deliver better results than complete platform replacements.

Moving Forward

AI automation offers genuine benefits, but only when built on solid foundations. These five data problems aren't rare edge cases: they're common challenges that affect most organizations to some degree.

The good news? They're all fixable with proper planning and structured approaches. We don't need perfect data or unlimited infrastructure budgets. We need realistic assessments of our current state and practical roadmaps for improvement.

If you're considering AI automation, start with an honest evaluation of your data infrastructure. Identify problems early, address them systematically, and build AI solutions on foundations that can actually support them.

Need help assessing your AI readiness? Get in touch to discuss how we can evaluate your infrastructure and develop a practical roadmap for AI adoption.